AI Integration

The AI Integration Microservice is a centralized component that manages and simplifies integrations with AI providers. OpenAI is currently the only integrated provider.

To create an AI-powered agent in XR Creator, first set up an assistant in OpenAI, then connect it to your agent in XR Creator.

1. How to Create an OpenAI Assistant

Follow these steps to set up an assistant in OpenAI, which will power your AI agent in the metaverse.

Step 1: Create an OpenAI Account

- Visit the OpenAI Signup Page and register an account.

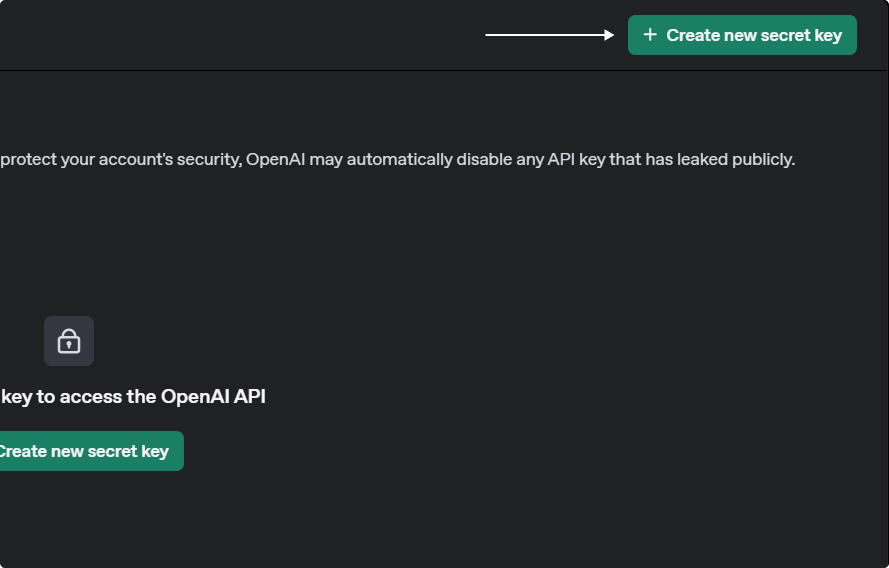

Step 2: Generate an OpenAI API Key

- Go to the OpenAI Dashboard.

- Navigate to the API Keys Section.

- Click Create new secret key.

- Provide a name tag, select a project, and set security permissions.

- Copy and store your API key safely, as it will not be shown again.

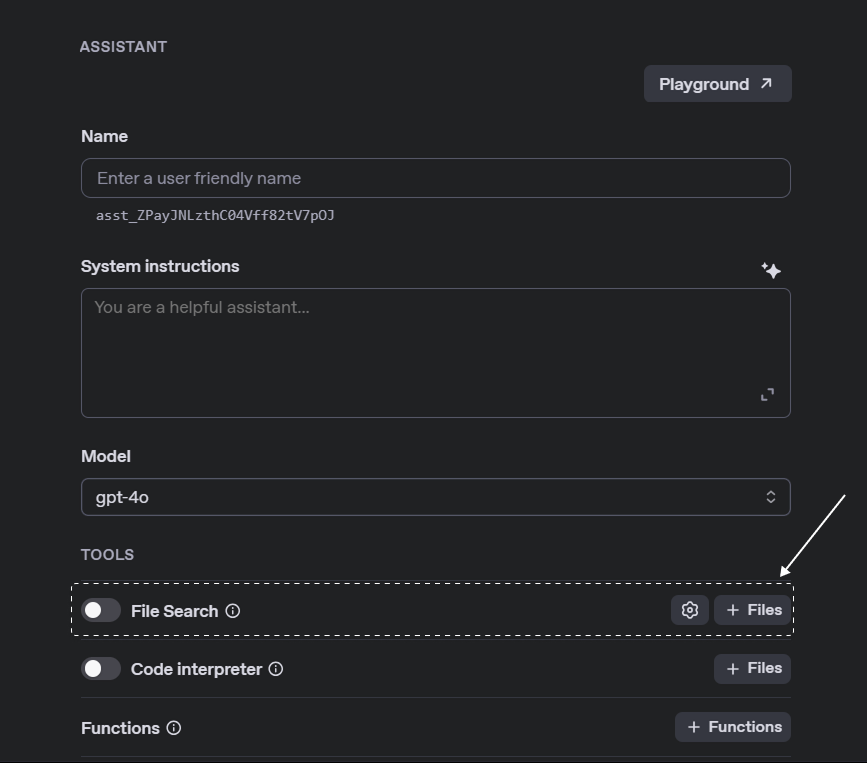

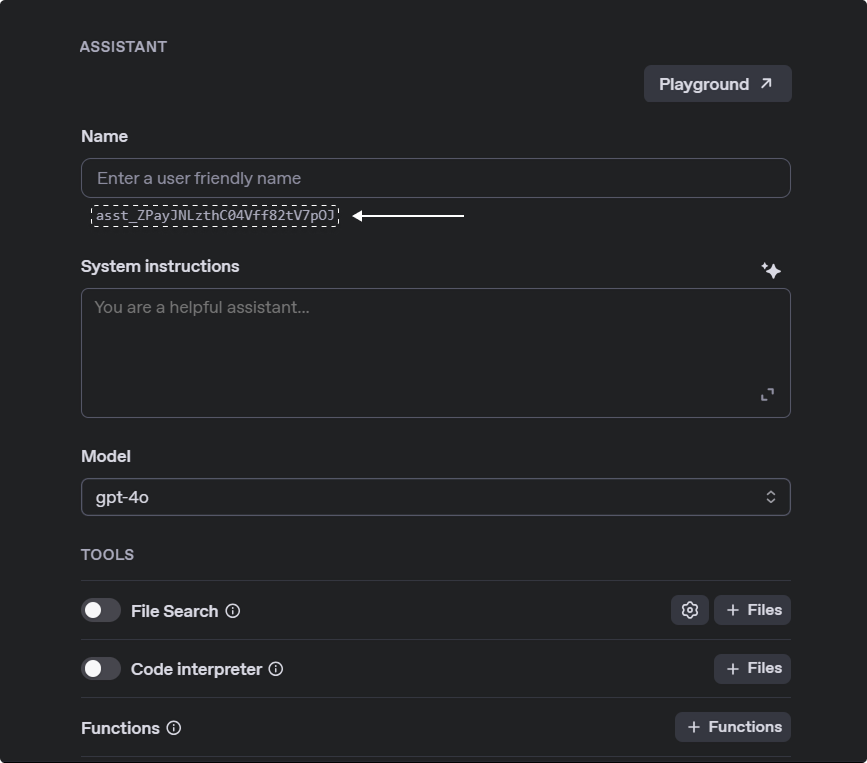

Step 3: Create an Assistant

- Open the Assistant Page.

- Click Create to start a new assistant.

- Enter a name and provide system instructions (up to 256,000 characters).

- Upload necessary files for the assistant’s knowledge base.

- Enable the File Search tool for retrieving information.

- Copy the Assistant ID for future use. You can find it below the name of your assistant.

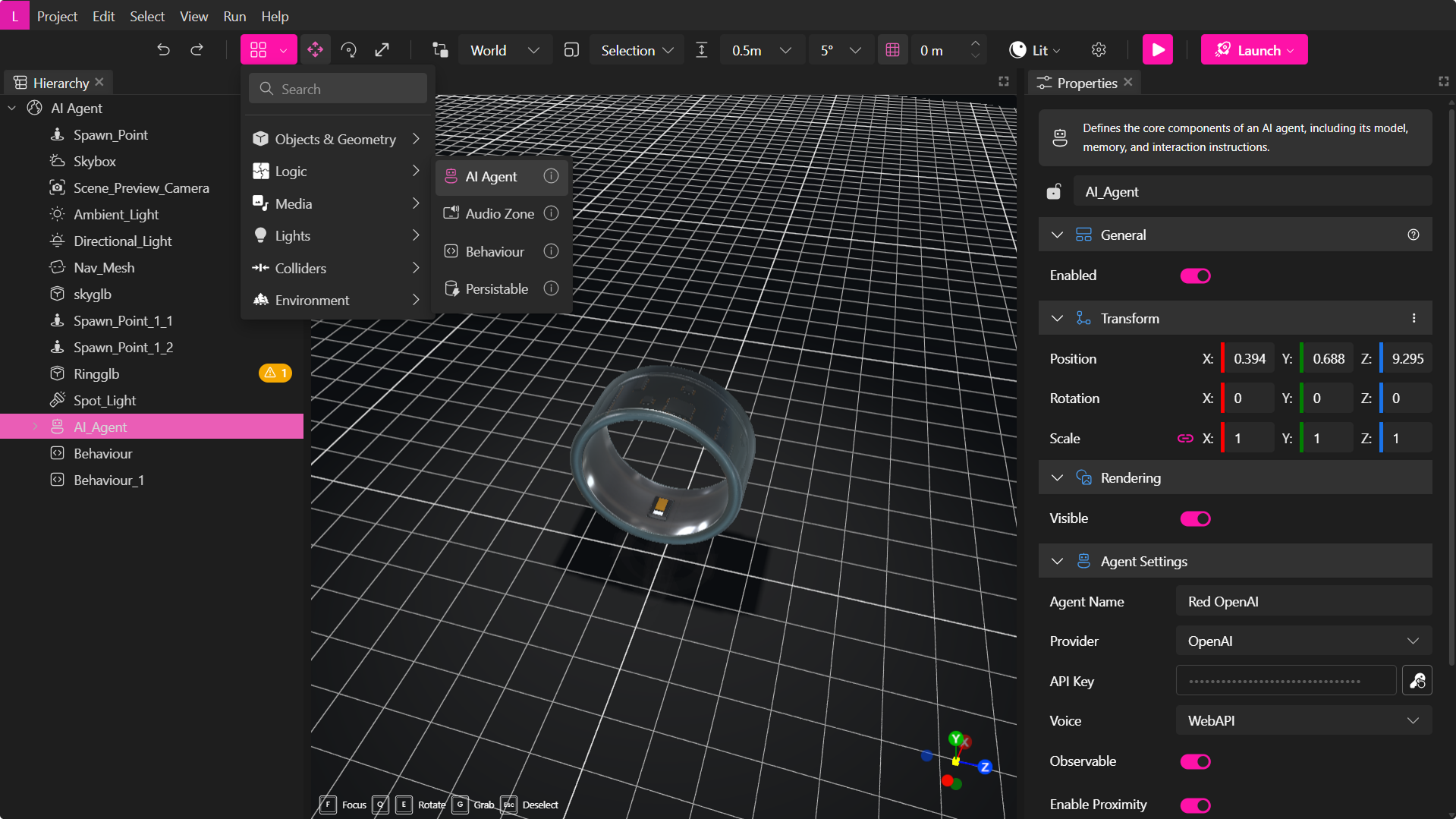
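
The dashboard steps above can also be expressed as a request to OpenAI's Assistants REST endpoint. The following is a minimal sketch and not part of XR Creator: `buildCreateAssistantRequest` and its parameter names are hypothetical, the default model `gpt-4o` is an assumption, and the length check simply mirrors the 256,000-character limit mentioned above. The function only builds the request, so it can be inspected before anything is sent.

```typescript
// Hypothetical helper: builds (but does not send) a request that creates an
// assistant through OpenAI's REST API, mirroring the dashboard steps above.
const MAX_INSTRUCTION_CHARS = 256000; // limit stated in this guide

interface AssistantRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

function buildCreateAssistantRequest(
  apiKey: string,
  name: string,
  instructions: string,
  model: string = "gpt-4o", // assumption: use any model your account can access
): AssistantRequest {
  if (apiKey.trim() === "") {
    throw new Error("An OpenAI API key is required.");
  }
  if (instructions.length > MAX_INSTRUCTION_CHARS) {
    throw new Error("Instructions exceed " + MAX_INSTRUCTION_CHARS + " characters.");
  }
  return {
    url: "https://api.openai.com/v1/assistants",
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      "Authorization": "Bearer " + apiKey,
      "OpenAI-Beta": "assistants=v2",
    },
    body: JSON.stringify({
      model: model,
      name: name,
      instructions: instructions,
      tools: [{ type: "file_search" }], // equivalent to enabling File Search
    }),
  };
}
```

Sending the result with `fetch(req.url, { method: req.method, headers: req.headers, body: req.body })` should return a JSON object whose `id` field is the Assistant ID (`asst_...`). Uploading knowledge-base files is not covered by this sketch.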

2. How to Create AI Agents in the XR Editor

Once you’ve set up an OpenAI assistant, you can integrate it into your XR project.

Step 1: Create an AI Agent in XR Creator

- Open your project in the XR Editor.

- In the Elements Menu, select AI Agent.

Step 2: Configure AI Agent Properties

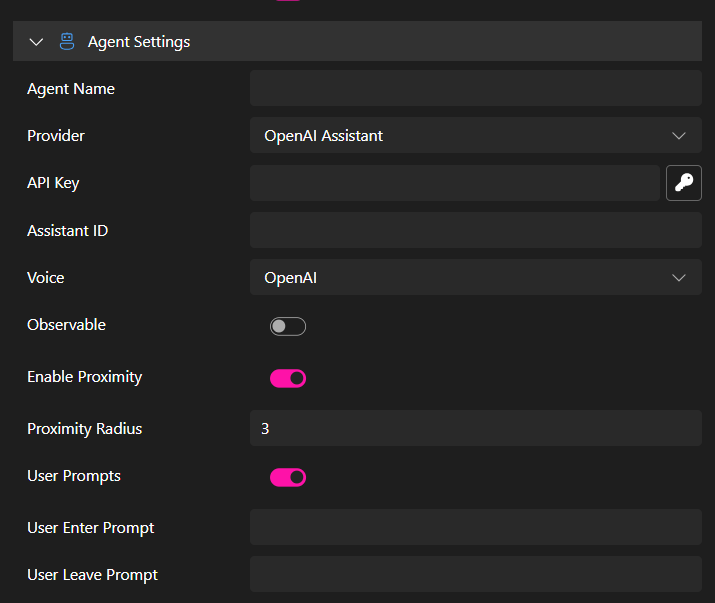

In the Properties Panel, you can set parameters to connect your assistant.

- Provider: Select the AI service (currently supports OpenAI).

- OpenAI API Key: Enter your secret API key securely.

- Agent Name: This name will appear in the chat interface.

- Assistant ID: The unique identifier (asst_...) from OpenAI, which you can find on the Assistant page.

- Voice Options:

  - OpenAI – Uses OpenAI’s voice generation.

  - WebAPI – Uses browser-based text-to-speech (varies by browser).

  - Silent – The agent will only communicate via text chat.

- Emit Events: Enable this to trigger in-world scripting events.

- Enable Proximity: The agent can detect when a user enters or exits a defined range.

Only the OpenAI API Key and the Assistant ID are required; all other properties are optional. Once the properties are set, publish your project to get a fully functional agent.
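
To make the required and optional properties concrete, here is a minimal sketch of how the settings could be modeled and checked before publishing. The `AgentProperties` type, its field names, and `validateAgentProperties` are hypothetical and only mirror the Properties Panel described above; XR Creator's internal names may differ.

```typescript
// Hypothetical shape for the AI Agent properties listed above.
type VoiceOption = "OpenAI" | "WebAPI" | "Silent";

interface AgentProperties {
  provider: "OpenAI";        // only provider currently supported
  apiKey: string;            // required
  assistantId: string;       // required, looks like "asst_..."
  agentName?: string;        // shown in the chat interface
  voice?: VoiceOption;
  emitEvents?: boolean;
  enableProximity?: boolean;
}

// Returns a list of problems; an empty list means both required
// properties are present.
function validateAgentProperties(props: AgentProperties): string[] {
  const problems: string[] = [];
  if (props.apiKey.trim() === "") {
    problems.push("OpenAI API Key is required.");
  }
  if (props.assistantId.indexOf("asst_") !== 0) {
    problems.push("Assistant ID is required and should start with asst_.");
  }
  return problems;
}
```

Returning a list rather than throwing lets an editor UI show every missing field at once.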

3. AI Agent Event System

When Emit Events is enabled, the AI Agent dispatches the following events:

| Event | Description |
|---|---|
| user-enter | Triggered when a user enters the agent's proximity region. Dispatched only if ‘Emit Events’ and ‘Enable Proximity’ are both enabled in the AI Agent component. |
| user-leave | Triggered when a user leaves the agent's proximity region. Dispatched only if ‘Emit Events’ and ‘Enable Proximity’ are both enabled. |
| agent-talk-start | Emitted when the agent begins speaking. When the audio is played with the play button, it is also emitted each time the voice synthesis sequence starts. |
| agent-talk-talking | Dispatched on every frame while the agent is speaking, carrying the modulated amplitude of the sound wave. Event handlers receive this amplitude (amp) as a float parameter. It is similar to the value MUDz uses to scale avatars when they speak; here it represents the audio level of the agent's speech. |
| agent-talk-end | Triggered when the agent finishes speaking. |
| agent-thinking | Dispatched when the agent is thinking, i.e., while the dots are shown in the chat. |
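
The following sketch illustrates how a script might react to these events. This guide does not show the actual subscription API, so `AgentEvents` is a hypothetical stand-in for the scripting event bus. The `scaleFromAmplitude` mapping and its 1.0 to 1.5 range are arbitrary choices, and the sketch assumes the amplitude lies between 0 and 1.

```typescript
type AgentEventName =
  | "user-enter"
  | "user-leave"
  | "agent-talk-start"
  | "agent-talk-talking"
  | "agent-talk-end"
  | "agent-thinking";

type Handler = (amp?: number) => void;

// Hypothetical event bus; replace with the platform's real subscription API.
class AgentEvents {
  private handlers: { [name: string]: Handler[] } = {};

  on(name: AgentEventName, handler: Handler): void {
    const list = this.handlers[name] || [];
    list.push(handler);
    this.handlers[name] = list;
  }

  emit(name: AgentEventName, amp?: number): void {
    for (const handler of this.handlers[name] || []) {
      handler(amp);
    }
  }
}

// Maps the amplitude from agent-talk-talking to a scale factor,
// clamping the input to the assumed 0..1 range.
function scaleFromAmplitude(amp: number): number {
  const clamped = Math.min(Math.max(amp, 0), 1);
  return 1 + 0.5 * clamped;
}

const agentEvents = new AgentEvents();
const agentState = { speaking: false, scale: 1 };

agentEvents.on("agent-talk-start", () => {
  agentState.speaking = true;
});
agentEvents.on("agent-talk-talking", (amp) => {
  // amp arrives once per frame while the agent speaks
  agentState.scale = scaleFromAmplitude(amp === undefined ? 0 : amp);
});
agentEvents.on("agent-talk-end", () => {
  agentState.speaking = false;
  agentState.scale = 1; // reset the animation when speech ends
});
```

Keeping the amplitude mapping in a separate pure function makes it easy to tune the animation without touching the event wiring.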